Chapter 6: Main Memory

Chapter 6: Memory Management

- Background

- Swapping

- Contiguous Memory Allocation

- Paging

- Structure of the Page Table

Objectives

- To provide a detailed description of various ways of organizing memory hardware

- To discuss various memory-management techniques, including paging and segmentation

Background

- Program must be brought (from disk) into memory and placed within a process for it to be run

- Main memory and registers are the only storage the CPU can access directly

- Register access in one CPU clock (or less)

- Main memory can take many cycles

- Cache sits between main memory and CPU registers

- Protection of memory required to ensure correct operation

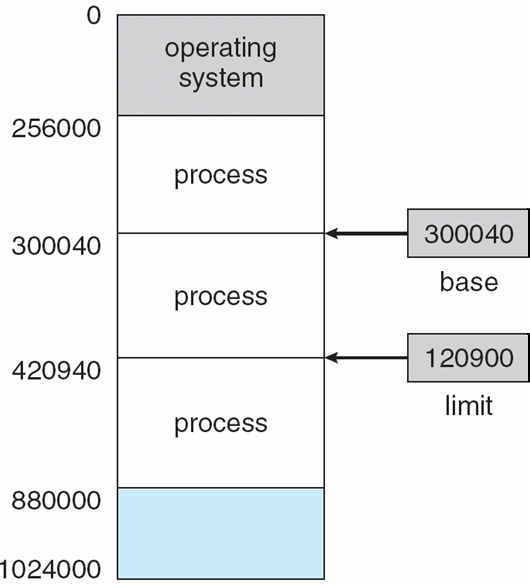

Base and Limit Registers

A pair of base and limit registers defines the logical address space

Base register: Specifies the smallest legal physical memory address.

Limit register: Specifies the size of the range.


The base and limit registers can be loaded only by the operating system.

- Ex: If the base register holds 300040 and the limit register is 120900, then the program can legally access all addresses from 300040 through 420939 (inclusive).

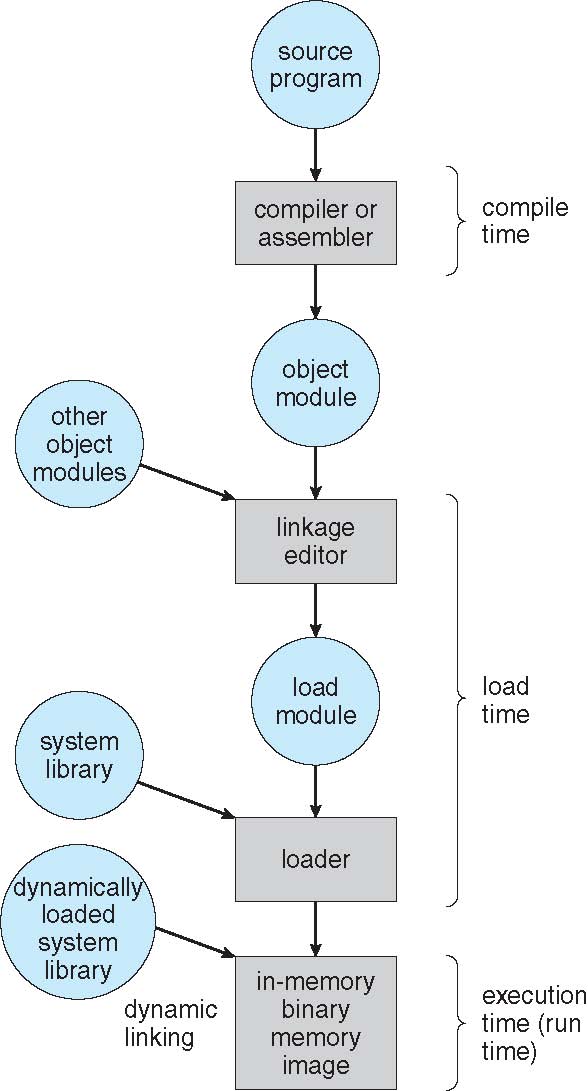
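
The range check in the example above can be sketched in a few lines. This is only an illustration of the comparison the hardware performs on every user-mode access; the function name `is_legal_access` is hypothetical, and the values are the ones from the example.

```python
def is_legal_access(address: int, base: int, limit: int) -> bool:
    """Return True if base <= address < base + limit (hypothetical helper)."""
    return base <= address < base + limit


BASE = 300040   # smallest legal physical address (from the example)
LIMIT = 120900  # size of the legal range (from the example)

print(is_legal_access(300040, BASE, LIMIT))  # True: first legal address
print(is_legal_access(420939, BASE, LIMIT))  # True: last legal address
print(is_legal_access(420940, BASE, LIMIT))  # False: one past the end, would trap to the OS
```

An address that fails the check causes a trap to the operating system rather than a memory access.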

Binding of Instructions and Data to Memory

- Address binding of instructions and data to memory addresses can happen at three different stages

- Compile time: If memory location known a priori, absolute code can be generated; must recompile code if starting location changes

- Load time: Must generate relocatable code if memory location is not known at compile time

- Execution time: Binding delayed until run time if the process can be moved during its execution from one memory segment to another. Need hardware support for address maps (e.g., base and limit registers)

Note: this topic will be on the final exam.

https://www.javatpoint.com/address-binding-in-operating-system

Multistep Processing of a User Program

Logical vs. Physical Address Space

- The concept of a logical address space that is bound to a separate physical address space is central to proper memory management

- Logical address – generated by the CPU; also referred to as virtual address

- Physical address – address seen by the memory unit

- Logical and physical addresses are the same in compile-time and load-time address-binding schemes; logical (virtual) and physical addresses differ in execution-time address-binding scheme

Memory-Management Unit (MMU)

- Hardware device that maps virtual to physical address

- In a simple MMU scheme, the value in the relocation (base) register is added to every address generated by a user process at the time it is sent to memory

- The user program deals with logical addresses; it never sees the real physical addresses

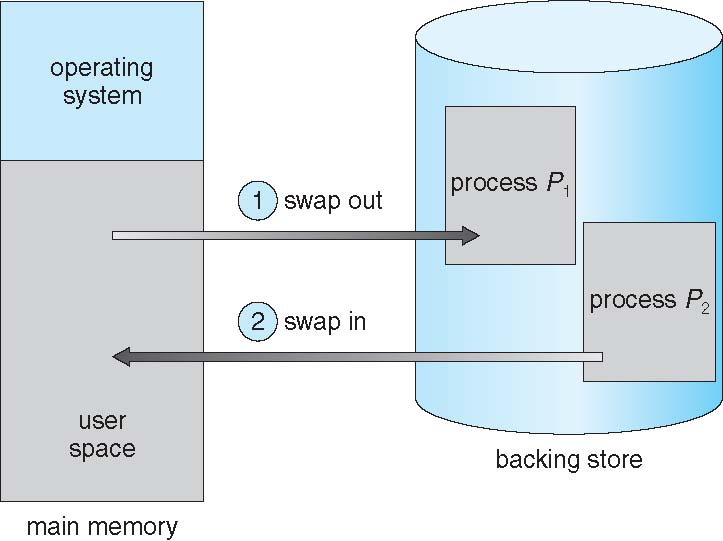
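
A minimal sketch of dynamic relocation, assuming a single relocation register plus a limit check. The class name `SimpleMMU`, the relocation value 14000, and the limit 1000 are illustrative assumptions, not values from the slides.

```python
class SimpleMMU:
    """Toy model of relocation-register translation (hypothetical class)."""

    def __init__(self, relocation: int, limit: int) -> None:
        self.relocation = relocation  # added to every logical address
        self.limit = limit            # size of the logical address space

    def translate(self, logical: int) -> int:
        # The logical address is checked against the limit first,
        # then relocated to produce the physical address.
        if not 0 <= logical < self.limit:
            raise MemoryError(f"addressing error: {logical}")
        return logical + self.relocation


mmu = SimpleMMU(relocation=14000, limit=1000)  # assumed values
print(mmu.translate(346))  # 14346
```

The program only ever works with the logical address 346; the physical address 14346 exists only on the memory side of the MMU.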

Swapping

- A process can be swapped temporarily out of memory to a backing store, and then brought back into memory for continued execution

- Backing store – fast disk large enough to accommodate copies of all memory images for all users; must provide direct access to these memory images

- Roll out, roll in – swapping variant used for priority-based scheduling algorithms; lower-priority process is swapped out so higher-priority process can be loaded and executed

- Major part of swap time is transfer time; total transfer time is directly proportional to the amount of memory swapped

- Modified versions of swapping are found on many systems (e.g., UNIX, Linux, and Windows)

- System maintains a ready queue of ready-to-run processes which have memory images on disk
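
To see why transfer time dominates and scales with the amount of memory swapped, here is a small worked calculation. The image size (100 MB) and transfer rate (50 MB/s) are assumed numbers chosen for easy arithmetic, and `swap_time_seconds` is a hypothetical helper.

```python
def swap_time_seconds(image_mb: float, rate_mb_per_s: float,
                      latency_s: float = 0.0) -> float:
    """Time to move one memory image to or from the backing store."""
    return latency_s + image_mb / rate_mb_per_s


one_way = swap_time_seconds(image_mb=100, rate_mb_per_s=50)  # assumed values
round_trip = 2 * one_way  # swap one process out, then another one in

print(one_way)     # 2.0
print(round_trip)  # 4.0
```

Doubling the image size doubles the transfer term, which is the proportionality stated in the list above.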

Schematic View of Swapping

Dynamic Storage-Allocation Problem

How to satisfy a request of size n from a list of free holes

- First-fit : Allocate the first hole that is big enough

- Best-fit : Allocate the smallest hole that is big enough; must search entire list, unless ordered by size

- Produces the smallest leftover hole

- Worst-fit : Allocate the largest hole; must also search entire list

- Produces the largest leftover hole

First-fit and best-fit are better than worst-fit in terms of speed and storage utilization (according to simulations)
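
The three strategies can be compared with a short sketch. Holes are modeled as a plain list of sizes, and each function returns the index of the chosen hole (or `None`); the function names and the sample hole list are illustrative assumptions.

```python
from typing import Optional, Sequence


def first_fit(holes: Sequence[int], n: int) -> Optional[int]:
    """Index of the first hole that is big enough."""
    for i, size in enumerate(holes):
        if size >= n:
            return i
    return None


def best_fit(holes: Sequence[int], n: int) -> Optional[int]:
    """Index of the smallest hole that is big enough (scans the whole list)."""
    candidates = [(size, i) for i, size in enumerate(holes) if size >= n]
    return min(candidates)[1] if candidates else None


def worst_fit(holes: Sequence[int], n: int) -> Optional[int]:
    """Index of the largest hole, if it is big enough (scans the whole list)."""
    candidates = [(size, i) for i, size in enumerate(holes) if size >= n]
    return max(candidates, key=lambda c: c[0])[1] if candidates else None


holes = [100, 500, 200, 300, 600]  # assumed free-hole sizes in KB
request = 212

print(first_fit(holes, request))  # 1 (the 500 KB hole)
print(best_fit(holes, request))   # 3 (the 300 KB hole, leftover 88 KB)
print(worst_fit(holes, request))  # 4 (the 600 KB hole, leftover 388 KB)
```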

https://www.tutorialspoint.com/operating_system/os_memory_allocation_qa2.htm

Fragmentation

- Memory fragmentation is a phenomenon that occurs in computer memory systems where available memory becomes divided into small, non-contiguous blocks. This division can occur in two primary forms: external fragmentation and internal fragmentation.

- External Fragmentation: This happens when free memory blocks are scattered throughout the memory space. While there may be sufficient total memory, the system cannot allocate it to processes because the free memory is not contiguous. As a result, you may have enough memory to meet a process’s needs but be unable to allocate it because there is no single continuous block available.

- Internal Fragmentation: This occurs when memory is allocated to a process, but the allocated memory block is larger than what the process actually needs. As a result, some memory within the block goes unused, leading to inefficient memory utilization.
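
The two forms can be told apart with simple arithmetic. The sketch below uses assumed sizes in KB; `is_externally_fragmented` and `internal_waste` are hypothetical helper names.

```python
from typing import Sequence


def is_externally_fragmented(holes: Sequence[int], request: int) -> bool:
    """Enough free memory in total, but no single hole can hold the request."""
    return sum(holes) >= request and max(holes, default=0) < request


def internal_waste(block_size: int, needed: int) -> int:
    """Unused memory inside a block that is larger than what was needed."""
    return block_size - needed


holes = [30, 20, 50]  # assumed scattered free holes (KB), 100 KB in total
print(is_externally_fragmented(holes, 80))  # True: 100 KB free, largest hole is 50 KB
print(internal_waste(block_size=64, needed=50))  # 14 KB unused inside the block
```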

To mitigate memory fragmentation, memory management techniques are employed, including:

- Memory Compaction: This involves relocating processes in memory so that all free space ends up in one large block, eliminating external fragmentation. Compaction is possible only if relocation is dynamic and done at execution time.

- Dynamic Memory Allocation Algorithms: These algorithms aim to minimize fragmentation by allocating memory in a way that reduces the likelihood of fragmentation. Examples include the Buddy System and First-Fit, Best-Fit, and Worst-Fit allocation strategies.

- Memory Pools: Some systems allocate memory in pools, where memory is pre-allocated in fixed-sized blocks. This can reduce fragmentation and improve efficiency, especially for processes with similar memory requirements.

- Garbage Collection: In managed programming languages like Java or C#, garbage collectors help manage memory and can reduce the impact of fragmentation by reclaiming unused memory.
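
As an illustration of the compaction technique listed above, the following sketch slides every allocation toward address 0 so that the free space becomes one hole. The `(name, start, size)` tuple layout and the sample values are assumptions made for the example; a real system would also have to copy the memory contents and update each process's relocation register.

```python
from typing import List, Tuple

Allocation = Tuple[str, int, int]  # (name, start, size), hypothetical layout


def compact(allocations: List[Allocation],
            memory_size: int) -> Tuple[List[Allocation], Tuple[int, int]]:
    """Pack allocations at the low end; return them plus the single (start, size) hole."""
    next_free = 0
    moved = []
    for name, _start, size in sorted(allocations, key=lambda a: a[1]):
        moved.append((name, next_free, size))
        next_free += size
    return moved, (next_free, memory_size - next_free)


before = [("A", 0, 20), ("B", 30, 10), ("C", 60, 25)]  # holes at 20-29, 40-59, 85-99
after, hole = compact(before, memory_size=100)

print(after)  # [('A', 0, 20), ('B', 20, 10), ('C', 30, 25)]
print(hole)   # (55, 45): one 45-unit hole instead of three smaller ones
```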

Paging

- Physical address space of a process can be noncontiguous; process is allocated physical memory whenever the latter is available

- Divide physical memory into fixed-sized blocks called frames (size is power of 2, between 512 bytes and 8,192 bytes)

- Divide logical memory into blocks of same size called pages

- Keep track of all free frames

- To run a program of size n pages, need to find n free frames and load program

- Set up a page table to translate logical to physical addresses

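- Paging avoids external fragmentation but can still cause internal fragmentation, because the last page of a process is rarely full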
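
The translation step and the leftover space in the last frame can be sketched as follows. The page size, the page-table contents, and the program size are assumed values for illustration; the page table is modeled as a plain dictionary from page number to frame number.

```python
from typing import Dict

PAGE_SIZE = 4096  # assumed; a power of 2 within the range given above


def translate(logical: int, page_table: Dict[int, int],
              page_size: int = PAGE_SIZE) -> int:
    """Split a logical address into (page, offset) and map the page to its frame."""
    page, offset = divmod(logical, page_size)
    if page not in page_table:
        raise MemoryError(f"page {page} is not mapped")
    return page_table[page] * page_size + offset


def frames_needed(program_bytes: int, page_size: int) -> int:
    """Number of frames for a program, rounding up to whole pages."""
    return -(-program_bytes // page_size)


def internal_fragmentation(program_bytes: int, page_size: int) -> int:
    """Unused bytes in the last frame."""
    return frames_needed(program_bytes, page_size) * page_size - program_bytes


page_table = {0: 5, 1: 6, 2: 1, 3: 2}  # assumed page -> frame mapping

print(translate(4100, page_table))          # 24580: page 1, offset 4 -> frame 6
print(frames_needed(72766, 2048))           # 36
print(internal_fragmentation(72766, 2048))  # 962
```

Because the page size is a power of 2, real hardware obtains the page number and offset by splitting the address bits rather than by dividing.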

Implementation of Page Table

- Page table is kept in main memory

- Page-table base register (PTBR) points to the page table

- Page-table length register (PTLR) indicates size of the page table

- In this scheme every data/instruction access requires two memory accesses. One for the page table and one for the data/instruction.

- The two-memory-access problem can be solved by the use of a special fast-lookup hardware cache called associative memory or a translation look-aside buffer (TLB)

- Some TLBs store an address-space identifier (ASID) in each TLB entry; the ASID uniquely identifies each process and provides address-space protection for that process
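
The effect of a TLB on the number of memory accesses can be sketched with a toy model. This is a simplification under stated assumptions: the TLB is an unbounded dictionary keyed by `(asid, page)` with no replacement policy, and the class and function names are hypothetical.

```python
from typing import Dict, Optional, Tuple


class TLB:
    """Toy TLB keyed by (ASID, page number); unbounded, no replacement policy."""

    def __init__(self) -> None:
        self.entries: Dict[Tuple[int, int], int] = {}

    def lookup(self, asid: int, page: int) -> Optional[int]:
        return self.entries.get((asid, page))

    def insert(self, asid: int, page: int, frame: int) -> None:
        self.entries[(asid, page)] = frame


def access(tlb: TLB, page_tables: Dict[int, Dict[int, int]],
           asid: int, page: int) -> Tuple[int, int]:
    """Return (frame, number of main-memory accesses) for one reference."""
    frame = tlb.lookup(asid, page)
    if frame is not None:
        return frame, 1              # hit: only the data/instruction access
    frame = page_tables[asid][page]  # miss: one extra access to read the page table
    tlb.insert(asid, page, frame)
    return frame, 2


page_tables = {1: {0: 5}, 2: {0: 9}}  # assumed per-process page tables, keyed by ASID
tlb = TLB()

print(access(tlb, page_tables, asid=1, page=0))  # (5, 2): first reference misses
print(access(tlb, page_tables, asid=1, page=0))  # (5, 1): second reference hits
print(access(tlb, page_tables, asid=2, page=0))  # (9, 2): other ASID cannot reuse the entry
```

Keying entries by ASID is what lets entries from several processes coexist in the TLB without one process using another's translation.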